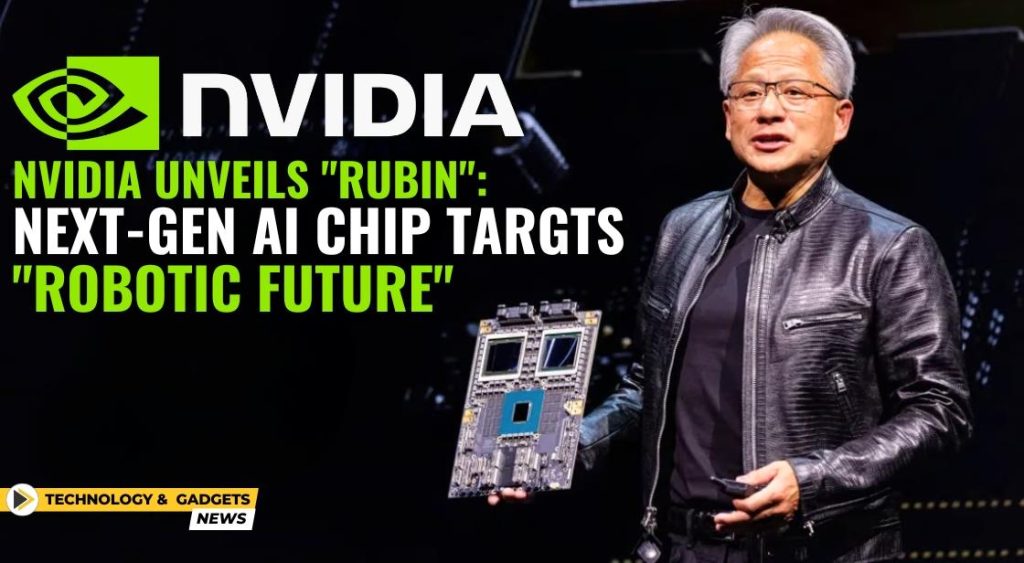

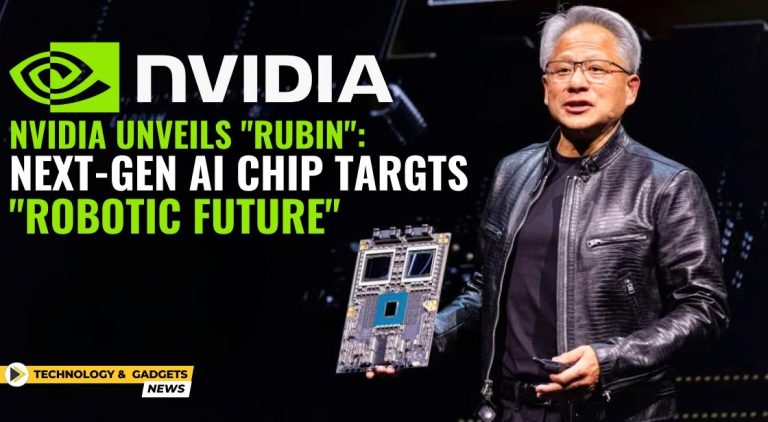

In March 2025, Nvidia, the powerhouse behind much of the world’s AI acceleration technology, announced its latest breakthrough: the Rubin AI Chip series. Officially unveiled at the highly anticipated GTC 2025 conference, the Rubin lineup—including the Blackwell Ultra and Vera Rubin chips—marks a significant leap forward for AI computing infrastructure. With increasing global demand for faster, more efficient, and sustainable AI processing, Nvidia’s new offerings arrive at a pivotal time.

This article delves into the technology, vision, and potential impacts of Nvidia’s Rubin series, showcasing why many experts believe this could define the next era of artificial intelligence.

A New Architecture for a New AI Age

The Rubin AI Chips aren’t just an incremental improvement over their predecessors—they represent a fundamental shift in design philosophy.

The Blackwell Ultra builds on the success of the Blackwell architecture released in 2024, but introduces substantial upgrades in tensor core performance, memory bandwidth, and energy efficiency. Designed specifically for hyperscale data centers, Blackwell Ultra offers unparalleled throughput for training and inference of large language models (LLMs), generative AI systems, and autonomous systems.

Meanwhile, the Vera Rubin chip, named after the pioneering astronomer Vera Rubin, focuses heavily on edge AI and robotics applications. It is optimized for real-time decision-making, low-latency environments, and highly parallel tasks like autonomous driving, manufacturing robotics, and AI-driven medical devices.

Together, these chips form a two-pronged strategy: supercharge the cloud while democratizing AI capabilities at the edge.

Key Features and Specifications

1. Next-Generation Tensor Cores

The Blackwell Ultra introduces 6th-generation tensor cores, dramatically improving operations like matrix multiplications central to deep learning. They support a wider range of precisions—including FP8, BF16, and the newly introduced UltraFP4 format—allowing for both extremely large model training and lightweight inference with high energy efficiency.
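To make the precision trade-off concrete, here is a minimal sketch of how the storage format changes the memory needed just to hold a model's weights. The 200-billion-parameter model is a hypothetical example, and the 4-bit width for UltraFP4 is an assumption inferred from the format's name, since the article gives no bit width.

```python
# Bits per value for each precision. The FP8 and BF16 widths are standard;
# the 4-bit width for "UltraFP4" is an assumption inferred from its name.
BITS_PER_VALUE = {"BF16": 16, "FP8": 8, "UltraFP4": 4}


def weight_memory_gb(num_params: int, precision: str) -> float:
    """Memory needed to store the weights alone, in gigabytes (1e9 bytes)."""
    bits = BITS_PER_VALUE[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 200_000_000_000  # hypothetical 200-billion-parameter model
    for name in BITS_PER_VALUE:
        print(f"{name}: {weight_memory_gb(params, name):.0f} GB")
```

Under these assumptions the same model needs 400 GB in BF16, 200 GB in FP8, and 100 GB in a 4-bit format. Optimizer state, activations, and caches are not counted here.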

2. Unprecedented Memory Bandwidth

Memory has often been the bottleneck for large AI models. Blackwell Ultra addresses this by leveraging stacked HBM4e memory with up to 2.5 TB/s bandwidth per chip. This allows AI models with hundreds of billions of parameters to train significantly faster with reduced memory swapping.
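As a rough illustration of why bandwidth matters, the sketch below estimates the minimum time needed to read every weight from memory once at the quoted 2.5 TB/s. The model size and the 2 bytes per parameter are hypothetical inputs, and the estimate ignores memory capacity limits, caching, and compute time, so treat it as a lower bound rather than a benchmark.

```python
def seconds_per_weight_pass(
    num_params: int, bytes_per_param: float, bandwidth_tb_s: float
) -> float:
    """Lower bound on the time to stream all weights from memory once."""
    total_bytes = num_params * bytes_per_param
    bytes_per_second = bandwidth_tb_s * 1e12  # 1 TB/s taken as 1e12 bytes/s
    return total_bytes / bytes_per_second


if __name__ == "__main__":
    # Hypothetical model: 200 billion parameters stored at 2 bytes each (BF16).
    t = seconds_per_weight_pass(200_000_000_000, 2, 2.5)
    print(f"One full pass over the weights takes at least {t:.2f} s")
```

Because the time scales inversely with bandwidth, doubling the bandwidth halves this bound, which is why memory throughput tends to dominate when every step has to touch all of the weights.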

3. Eco-Efficiency at the Core

Sustainability was a major design priority. Both chips offer up to 2.8x performance-per-watt improvements over their predecessors. Data centers, increasingly under scrutiny for their energy usage, can now achieve greater throughput without a proportional increase in power consumption.
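The 2.8x performance-per-watt figure can be read in two ways: the same work for less power, or more work for the same power. The following sketch works through both readings. The 1,000 kW baseline is a hypothetical data-center budget chosen for illustration, and the calculation assumes the full 2.8x gain is realized, which the article states only as an upper bound.

```python
def power_for_same_throughput(baseline_kw: float, perf_per_watt_gain: float) -> float:
    """Power needed to match the baseline's throughput after the efficiency gain."""
    return baseline_kw / perf_per_watt_gain


def throughput_for_same_power(baseline_throughput: float, perf_per_watt_gain: float) -> float:
    """Throughput achievable within the baseline's power budget after the gain."""
    return baseline_throughput * perf_per_watt_gain


if __name__ == "__main__":
    gain = 2.8            # "up to" figure quoted in the article
    baseline_kw = 1000.0  # hypothetical power budget
    new_kw = power_for_same_throughput(baseline_kw, gain)
    saved = 100 * (1 - new_kw / baseline_kw)
    print(f"Same throughput at {new_kw:.0f} kW ({saved:.0f}% less power)")
    print(f"Or {throughput_for_same_power(1.0, gain):.1f}x throughput at {baseline_kw:.0f} kW")
```

In other words, a 2.8x gain corresponds to roughly a 64% power reduction for a fixed workload, not a 280% one.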

4. Rubin Fabric: Seamless Chip-to-Chip Communication

One of the less visible changes in the Rubin architecture is the Rubin Fabric, a new high-speed, low-latency interconnect that lets clusters of GPUs operate as if they were a single massive processor. With speeds of up to 3 TB/s between chips, the fabric allows developers to train AI models that were previously infeasible because of interconnect bottlenecks.
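To see how interconnect speed feeds into training time, the sketch below estimates how long it takes to synchronize gradients across a group of GPUs using ring all-reduce, a widely used collective algorithm. This is an illustrative assumption: the article does not say which collective algorithms the Rubin Fabric uses. The estimate treats the quoted 3 TB/s as the per-GPU link bandwidth and ignores latency and overlap with compute.

```python
def ring_allreduce_seconds(
    payload_bytes: float, num_gpus: int, link_tb_s: float
) -> float:
    """Idealized ring all-reduce time.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N times
    the payload size, so the bandwidth-bound time is that volume divided by
    the per-GPU link bandwidth.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    volume = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return volume / (link_tb_s * 1e12)


if __name__ == "__main__":
    gradients = 400e9  # hypothetical: 200 billion BF16 gradients, 2 bytes each
    for bandwidth in (1.0, 3.0):
        t = ring_allreduce_seconds(gradients, num_gpus=8, link_tb_s=bandwidth)
        print(f"{bandwidth:.0f} TB/s link: {t:.3f} s per gradient sync")
```

Since this cost recurs on every training step, a threefold increase in link bandwidth cuts the bandwidth-bound part of each synchronization to a third.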

5. Edge-Optimized Design with Vera Rubin

The Vera Rubin chip is small, highly efficient, and ruggedized. It supports real-time 3D vision, sensor fusion, and multi-agent reinforcement learning, making it well suited to autonomous machines operating in unpredictable environments.

Designed for the Demands of Tomorrow

The scale of AI is growing explosively. Models like GPT-5, Gemini Ultra, and future multimodal systems require orders of magnitude more compute than models from just a few years ago. Nvidia’s Rubin series is designed with this hypergrowth in mind.

Moreover, generative AI isn’t staying solely in the cloud anymore. Local AI agents, edge-based copilots, and autonomous robotics are quickly becoming reality. Vera Rubin ensures that these systems can function independently of massive cloud infrastructure, crucial for industries like healthcare, defense, and manufacturing.

Key Applications and Early Adopters

Several tech giants and AI labs have announced plans, or are reported to be planning, to integrate Rubin-based systems into their operations:

- OpenAI is rumored to be using Blackwell Ultra in its upcoming “Omni” multimodal AI.
- Tesla plans to leverage Vera Rubin in its Full Self-Driving (FSD) AI training clusters and next-gen Optimus robots.
- Meta and Microsoft Azure are among the first to deploy Rubin GPUs at hyperscale to support LLM-as-a-Service offerings.

In academia, Rubin-powered clusters are expected to be central to new research initiatives focusing on AI for drug discovery, climate modeling, and even fusion energy simulations.

How Rubin Compares to the Competition

Rubin’s main rivals are AMD’s Instinct MI400 series and Google’s custom TPU v6 chips.

- Performance: Early benchmarks suggest Blackwell Ultra outpaces TPU v6 by about 20–30% in LLM training and by about 40% in multimodal (text + image + video) AI tasks.
- Energy Efficiency: Rubin’s power efficiency, particularly in edge settings with Vera Rubin, currently leads the market.
- Flexibility: Nvidia’s broad software stack, including CUDA, TensorRT, and the NeMo framework for LLM training, makes adoption easier than on competing platforms.

Challenges Ahead

Despite its advantages, Nvidia faces challenges:

- Supply Chain Pressure: Demand for advanced HBM4e memory and leading-edge fabs at TSMC is at an all-time high.
- Cost: Rubin GPUs are expected to be extremely expensive, with estimates ranging from $40,000 to $70,000 per unit for Blackwell Ultra.
- Regulatory Scrutiny: Governments worldwide are examining AI chip exports and the concentration of AI hardware supply among a few firms, including Nvidia.

How Nvidia navigates these hurdles will determine just how dominant the Rubin generation becomes.

A Glimpse Into the Future

Jensen Huang, Nvidia’s charismatic CEO, summarized the company’s ambition during his GTC keynote:

“We are entering the Age of AI factories, where intelligence is produced at scale. Rubin is the engine powering this revolution.”

Nvidia’s Rubin AI chips do more than push the performance envelope—they lay the foundation for the decentralized, intelligent systems that will define the 2030s. From fully autonomous vehicles to AI medical assistants and climate simulation supercomputers, Rubin could very well be the hardware backbone of humanity’s next technological leap.

As AI rapidly evolves, one thing is clear: the future runs on silicon—and Nvidia’s Rubin is at the cutting edge.